Can I Use Gans For Lost Data

Direction Summary

In contempo years, neural networks have get the core engineering science in auto learning and AI. Besides the classical applications of data nomenclature and regression, they can besides exist used to create new, realistic information points. The established model architecture for data generation are so-called Generative Adversarial Networks (GANs). GANs consist of 2 neural networks: the generator network and the discriminator network. These 2 networks are iteratively trained confronting each other. The generator tries to create a realistic data point, while the discriminator learns to distinguish existent and constructed data points. In this Minimax game, the generator improves its functioning until the generated data points can no longer exist distinguished from real data points.

The generation of synthetic data using GANs has a variety of heady applications in practice:

- Image Synthesis The first successes of GANs were achieved based on prototype data. Today, specific GAN architectures tin generate images of faces that are inappreciably distinguishable from existent ones.

- Music Synthesis Likewise pictures, GAN models can also be used to generate music. In music synthesis, promising results have been achieved both for the creation of realistic waveforms and for the creation of entire melodies.

- Super Resolution Some other example of the successful utilise of GANs is Super-Resolution. Here, an attempt is made to improve the resolution of an image or to increase the size of the paradigm without loss. Using GANs, sharper transitions betwixt different image areas can be achieved than with, e.g., interpolation, and the general image quality can be improved.

- Deepfakes Using deepfake algorithms, faces in images and video recordings can be replaced by other faces. The algorithm is trained on as much information of the target person equally possible. Afterward, a third person's facial expressions can exist transferred to the person to be imitated, and a "deepfake" is created.

- Generating Additional Training Data GANs are also used to grow training information (also called augmentation), oft only available in small amounts in machine learning. A GAN is calibrated on the preparation data set and so that afterward, any number of new training examples can be generated. The goal is to better the performance or generalizability of ML models with the additionally generated data.

- Anonymization of Information Finally, GANs can besides exist used to brand information anonymous. This is a highly relevant utilise case, particularly for data sets containing personal data. The advantage of GANs over other information anonymization approaches is that the statistical backdrop of the data set are preserved. This, in turn, is important for the performance of other automobile learning models that are trained on this information. Since the processing of personal data is a meaning hurdle in automobile learning today, GANs for data gear up anonymization are a promising approach and have the potential to open the door for companies to procedure personal information.

In this commodity, I am commencement going to explain how GANs work in general. Afterward, I will talk over several use cases that can be implemented with the help of GANs, and to sum up, I will present current trends that are emerging in the area of generative networks.

Introduction

The most remarkable progress in artificial intelligence in recent years has been achieved through the awarding of neural networks. These have proven to be an extremely reliable approach to regression and nomenclature, peculiarly when processing unstructured data, such as text or images. A well-known example is the nomenclature of images into two or more groups. On the and then-called ImageNet dataset [ane] with a total of 1000 classes, neural networks attain a pinnacle 5 accuracy of 98.7%. This means that for 98.seven% of the images, the correct class is included in the v best model predictions. In recent years, groundbreaking results take also been achieved in natural speech communication processing by applying neural networks. For example, the Transformer architecture has been used very successfully for various speech communication processing problems such equally question answering, named entity recognition, and sentiment analysis.

A less well-known fact is that neural networks can likewise be used to larn the underlying distribution of a data set up to generate new, realistic case data. Lately, Generative Adversarial Networks (GANs) have established themselves equally a model architecture for this problem. Using them makes it possible to generate synthetic data points with the same statistical backdrop as the underlying training data. This opens up many compelling use cases, some of which are presented beneath.

In this article, I am first going to explain how GANs work in general. Later, I will discuss several use cases that can be implemented with the help of GANs, and then sum upwardly, I will present current trends that are emerging in the surface area of generative networks.

Generative Adversarial Networks

GANs are a new class of algorithms in machine learning. Equally explained above, they are models that can generate new, realistic data points afterward being trained on a specific data set. GANs basically consist of two neural networks that are responsible for particular tasks in the learning process. The generator is responsible for transforming a randomly generated input into a realistic sample of the distribution, which has to exist learned. In contrast, the discriminator is responsible for distinguishing existent from generated information points. A simple analogy is the interplay of a forger and a policeman. The counterfeiter tries to create counterfeit coins that are every bit realistic equally possible, while the policeman wants to distinguish the counterfeits from real coins. The amend the counterfeiter'due south replicas are, the more than reliable the policeman must exist at distinguishing the coins to recognize the counterfeit copies.

At his indicate, the forger has to better his skills fifty-fifty farther to fool the policeman, who has now improved his experience. It is evident that both the counterfeiter and the policeman become improve in their respective tasks in the course of this procedure. This iterative process also forms the footing for training the GAN models. Hither, the forger corresponds to the generator, while the policeman takes on the discriminator's role.

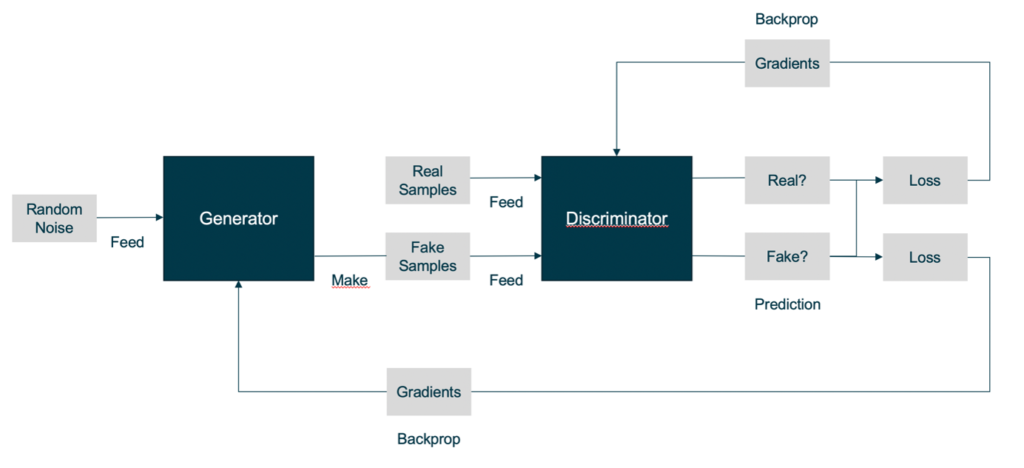

How are generator and discriminator networks trained?

In the start step, the discriminator is fixed. This ways that in this step, no adjustments of the parameters are made for the discriminator network. The generator is then trained for a sure number of grooming steps. The generator is trained using backpropagation, as usual, for neural networks. Its goal is that the "fake" outputs resulting from the random input are classified as real examples by the current discriminator. It is of import to mention that the grooming progress depends on the current state of the discriminator.

In the 2d step, the generator is fixed, and only the discriminator is trained by processing both real and generated examples with corresponding labels every bit training input. The goal of the training process is that the generator learns to create examples that are and so realistic that the discriminator cannot distinguish them from real ones. For a meliorate understanding, the GAN grooming process is shown schematically in figure 1.

Challenges in the grooming process

Due to the nature of the alternate training procedure, various problems can occur when training a GAN. A common challenge is that the discriminator's feedback gets worse, the amend the generator gets during the training.

You can imagine the process equally follows: If the generator tin can generate examples that are indistinguishable from real examples, the discriminator has no selection simply to approximate from which class the respective case comes. So if yous practise non terminate the training in time, the quality of the generator and the discriminator may decrease again due to the random render values of the discriminator.

Another common trouble is the so-chosen "mode collapse". This occurs when the network, instead of learning the properties of the underlying information, remembers single examples of this data or generates merely examples with low variability. Some approaches to counteract this trouble are to process several examples simultaneously (in batches) or to testify by examples simultaneously so that the discriminator can quantify the examples' lack of distinctiveness.

Use Cases for GANs

The generation of synthetic information using GANs has a diverseness of exciting applications in practice. The first impressive results of GANs were achieved based on image information, closely followed past the generation of audio data. However, in recent years, GANs take also been successfully practical to other data types, such as tabular data. In the post-obit, selected applications are presented.

1. Image Synthesis

One of the all-time-known applications of GANs is image synthesis. Here a GAN is trained based on an extensive paradigm data set. Thereby the generator network learns the of import common features and structures of the images. One case is the generation of faces. The portraits in figure 2 were generated using special GAN networks, which use convolutional neural networks (CNN) optimized for image data. Of course, other types of images can also exist generated using GANs, such equally handwritten characters, photos of objects, or houses. The bones requirement for good results is a sufficiently big data set.

A practical use case of this technology can be found in online retail. Here, GAN is used to create photos of models in specific garments or poses.

2. Music Synthesis

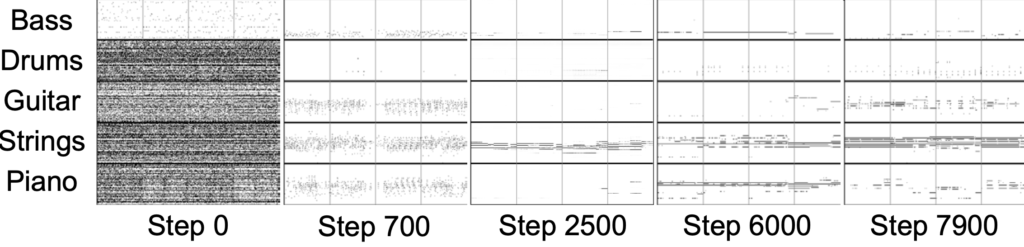

In dissimilarity to the creation of images, music synthesis contains a temporal component. Since audio waveforms are very periodic, and the human ear is very sensitive to deviations of these waveforms, maintaining signal periodicity is highly of import for GANs that generate music. This has led to GAN models that generate the magnitudes and frequencies of sound instead of the waveform. Examples of GANs for music synthesis are the GANSynth [3], which is optimized for the generation of realistic waveforms, and the MuseGAN [4] – itself specialized for the generation of whole melody sequences. On the internet, you tin notice various sources presenting music pieces generated by GANs. [v, half dozen] It is expected that GANs volition lead to a high level of disruption in the music industry in the next few years.

Figure iii shows excerpts of the music generated by MuseGAN for unlike training steps. At the commencement of the training (footstep 0), the output is purely random, and in the final step (step 7900), you can encounter that the bass plays a typical bassline, and the strings play mainly held chords.

3. Super Resolution

The procedure of recovering a high-resolution paradigm from a lower-resolution epitome is called super-resolution. The difficulty hither is that in that location are many possible solutions to recover the prototype.

A classic approach to super-resolution is interpolation. Here, the pixels missing for the higher resolution are derived from the neighboring pixels using a given function. Typically, yet, a lot of detail is lost, and the transitions between different parts of the image become blurred. Since GANs are good at learning the main features of image data, they are also a suitable approach to the problem of super-resolution. Here, the generator network no longer uses a random input merely the low-resolution prototype. The discriminator so learns to distinguish generated college resolution images from the existent higher resolution images. The trained GAN network tin then be used on new low-resolution images.

A State of the art GAN for super-resolution is the SRGAN [7]. Figure 4 shows the results for different super-resolution approaches for an instance epitome. The SRGAN (2nd image from the right) provides the sharpest consequence. Especially details like water drops or the surface structure of the headdress are successfully reconstructed past the SRGAN.

Illustration iv – Instance Super-Resolution for 4x Upscaling (from left to right: bicubic interpolation, SRResNet, SRGAN, original prototype) [vii]

4. Deepfakes: Audio and Video

Using deepfake algorithms, faces in images and video recordings can be replaced by other faces. The results are at present so expert that the deepfakes are hard to distinguish from real recordings. To create a deepfake, the algorithm is first trained on as much information of the target person as possible then that a tertiary person'due south facial expressions can exist transferred to the imitated person. Figure 5 shows the earlier-and-later comparison of a deepfake. The face up of actor Matthew McConaughey (left) was replaced by tech entrepreneur Elon Musk (correct).

This technique tin also exist applied to audio information, for example. Hither, the model is trained to a target voice, and then the voice in the original recording is replaced by the target voice.  Effigy 5 – Deepfake Sample Images [eight]

Effigy 5 – Deepfake Sample Images [eight]

five. Generation of Boosted Training Data

To train deep neural networks with many parameters, huge amounts of data are ordinarily required. Oft it is difficult or even impossible to obtain or collect a sufficiently large corporeality of data. One approach to achieve a good result with less information is data augmentation. This involves slightly modifying existing data points to create new preparation examples. This is often applied in estimator vision, for case, by rotating images or using the zoom to derive new image sections. A more than recent arroyo to data augmentation is the utilise of GANs. The idea is that GANs learn the distribution of training data, and thus theoretically, an infinite number of new examples can exist generated. For case, this approach has been successfully implemented to detect diseases in tomographic images [nine]. GANs can thus generate new grooming data for issues where there is non plenty data available for training.

In many cases, the collection of large amounts of data is also associated with considerable costs, especially if the data has to exist manually marked for grooming. In these situations, GANs can be used to reduce the price of boosted information acquisition.

Imbalanced datasets are another technical problem in the preparation of machine learning models where GANs tin help. These are datasets with different classes that are represented with unlike frequencies. To get more training data of the underrepresented grade, a GAN can exist trained for this form to generate more than synthetically generated information points, which are and so used in training. For example, there are significantly fewer microscope images of cancer-afflicted cells than good for you ones. In this case, GANs make it possible to railroad train better models for detecting cancer cells and can thus back up physicians in the diagnosis of cancer.

half-dozen. Anonymization of Data

Some other exciting awarding of GANs is the anonymization of data sets. Classical approaches to anonymization are the removal of identifier columns or the random changing of their values. Resulting problems are, for case, that with the appropriate prior cognition, conclusions can still exist fatigued about the persons behind the personal information. Irresolute the statistical properties of the information set past missing or modifying certain information tin can also reduce the usefulness of the information. GANs can likewise be used to generate bearding information sets. They are trained and then that the personal data in the generated data prepare can no longer exist identified, simply models can still be trained similarly well [x]. This consistent quality of the models tin be explained past the fact that the underlying statistical properties of the original information set up are learned by the GAN and thus preserved.

Anonymization using GANs tin can be applied not only to tabular data just too specifically to images. Faces or other personal features/elements on the prototype are replaced by generated variants. This allows us to train calculator vision models with realistic looking information without existence confronted with privacy issues. Ofttimes, the models' quality is significantly reduced if important features of an image, such as a face, are pixelated or blurred in the training procedure.

Conclusion and outlook

By using GANs, the distribution of data sets of any kind can be learned. Every bit explained above, GANs have already been successfully applied to various problems. Since GANs were only discovered in 2014 and have proven great potential, they are currently being researched very intensively. The solution to the issues mentioned above during training, such as "mode collapse", are widely used in research. Amid other things, research is being conducted on culling loss functions and by and large stabilizing training procedures. Some other active research area is the convergence of GAN networks. Due to the generator and discriminator's progress during the grooming process, it is very of import to stop the grooming at the right time to not railroad train the generator further on bad discriminator results. To stabilize the training, inquiry is also being done on approaches to add noise to the discriminator inputs to limit the discriminator'southward aligning during training.

A modified approach to generate new training data are Generative Teaching Networks. Here, the focus of the training is non primarily on learning how to distribute the data, just 1 tries to learn straight which data drives the training the fastest without necessarily prescribing similarity to the original information. 11] Using handwritten numbers as an example, information technology could be shown that neural networks can acquire faster with these artificial input data than with the original data. This arroyo tin can as well be applied to data other than image data. In the field of anonymization, it has so far been possible to reliably generate parts of a information prepare with personality protection guarantees. These anonymization networks can exist further developed to cover more types of data sets.

In the field of GANs, theoretical progress is being fabricated very quickly and volition before long detect its mode into do. Since the processing of personal data is a major hurdle in machine learning today, GANs for the anonymization of information sets are a promising approach and take the potential to open the door for companies to process personal information.

Resources

- http://www.epitome-net.org/

- https://arxiv.org/abs/1710.10196

- https://openreview.internet/pdf?id=H1xQVn09FX

- https://arxiv.org/abs/1709.06298

- https://salu133445.github.io/musegan/results

- https://storage.googleapis.com/magentadata/papers/gansynth/index.html

- https://arxiv.org/abs/1609.04802

- https://github.com/iperov/DeepFaceLab

- https://arxiv.org/abs/1803.01229

- https://arxiv.org/abs/1806.03384

- https://eng.uber.com/generative-educational activity-networks/

Can I Use Gans For Lost Data,

Source: https://www.statworx.com/en/content-hub/blog/generative-adversarial-networks-how-data-can-be-generated-with-neural-networks/

Posted by: fanninextre1983.blogspot.com

0 Response to "Can I Use Gans For Lost Data"

Post a Comment